The AGI Race Is Perniciously Pro-Extinctionist: Here Are the Receipts

TESCREALists imagine a future in which our species goes extinct by being replaced by posthumans

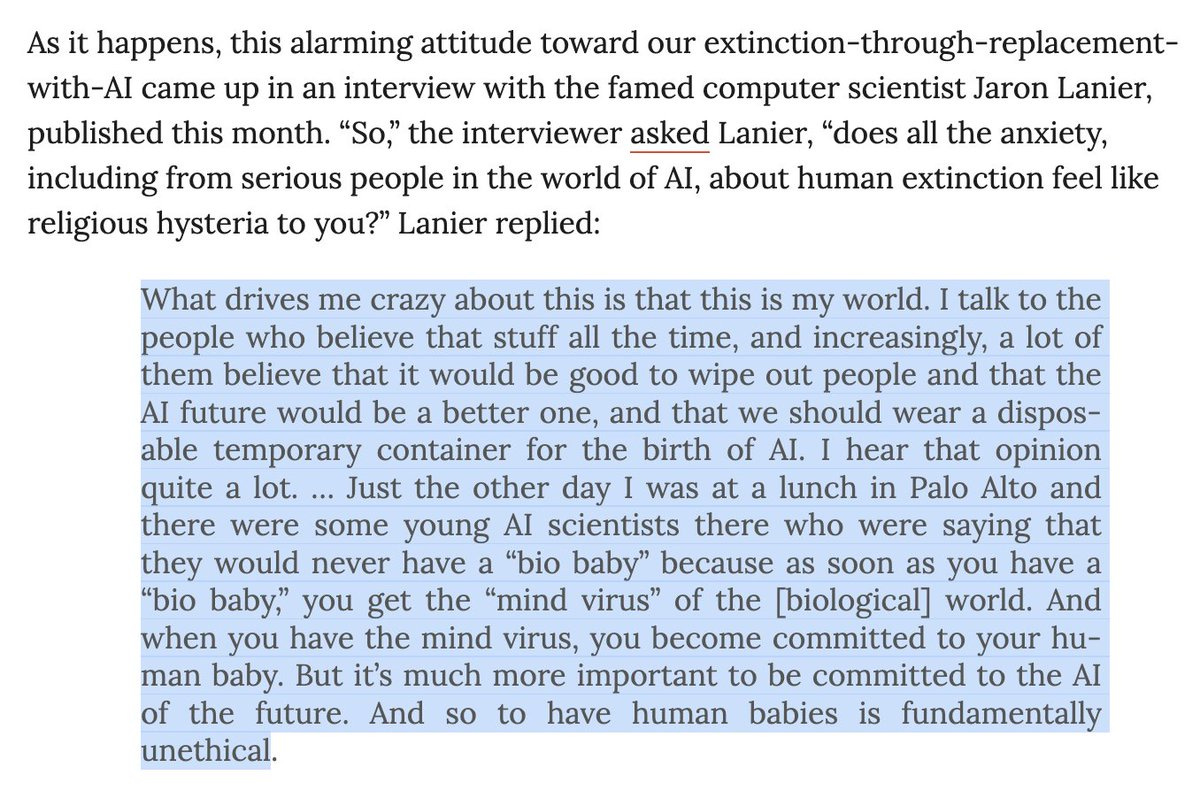

If you’re not on X, congratulations. For those of us whose masochistic proclivities exceed our wisdom, a recent thread on X may be of interest. It provides some examples, in the form of video clips and excerpted texts, of a frightening phenomenon that I’ve been screaming about (mostly into the void) for years: at the heart of the TESCREAL worldview is a pro-extinctionist stance, the positive hope that our species will go extinct in the near future. Many notable figures within the TESCREAL movement are explicit that Homo sapiens should be replaced, in the coming years or decades, by a new posthuman species. Others are less explicit about this, but would still say that if our species were to vanish once posthumanity arrives, so much the better. In an academic article titled “On the Extinction of Humanity,” forthcoming in Synthese, I call this latter view “extinction neutralism” (sorry, we philosophers have a predilection for introducing obscure, idiosyncratic phraseology because it makes us sound more clever than we actually are. Well, maybe I should speak only for myself here!).

So: the large majority of TESCREALists fall somewhere on the spectrum between extinction neutralism and pro-extinctionism, with numerous notable figures explicitly endorsing the latter. Yikes. We must, therefore, recognize that TESCREALism poses a direct threat to our collective survival and flourishing that is no less significant, and probably much more so, than nuclear conflict, global pandemics, climate change, and asteroid impacts. In fact, TESCREALism may be making these threats worse: TESCREALists believe that ASI (artificial superintelligence) is going to usher in a utopia, or what the pseudo-profound Nick Bostrom calls a “solved world,” and so they’re racing ahead to build it even though they understand that LLMs are nontrivially exacerbating the rapidly deteriorating climate predicament. I hate this timeline that we all somehow got trapped in without our consent!

For those wiser than me, who aren’t on X, here’s the X thread of videos and texts that demonstrate the pro-extinctionist tendencies of these anti-human extremists. I should add, as I’ve said elsewhere (and in the thread below), that one shouldn’t be misled by their duplicitous rhetoric: TESCREALists are among the loudest voices today shouting that we must avoid “human extinction,” but they define “human” as encompassing our species plus whatever descendants we might have. This is sneaky, because it means that our species could die out — entirely and forever — five years from now without “human extinction” having occurred. So long as we are replaced by the right kind of digital posthumans or ASIs, “humanity” would live on. But if we define “humanity” as denoting our species, as I think we should, then these people couldn’t care less about “human extinction” — some even want it to happen.

Here’s the thread. I’d love to know what you think! If I’m wrong about something, please let me know. :-)

PS. I’ll make an official announcement about this in a month or so, but for now: I’m leaving academia at the end of this year, perhaps temporarily, perhaps permanently, to try my luck as a freelance journalist focused on AI, Silicon Valley ideologies, politics, and human extinction. If you would like to support my work, I would greatly appreciate it! (But, of course, no obligation!) You can find me on Patreon here: https://www.patreon.com/c/xriskology. Now, to the X thread!

I can't stress enough that the whole push to build AGI & the TESCREAL worldview behind it is fundamentally pro-extinctionist. If there is one thing people need to understand, it's this. Here's a short thread explaining the idea, starting with this clip of Daniel Kokotajlo:

Here's Eliezer Yudkowsky, whose views on "AI safety" have greatly influenced people like Kokotajlo, saying that he's not worried about humanity being replaced with AI posthumans--he's worried that our replacements won't be "better."

In this clip, Yudkowsky says that in principle he'd be willing to "sacrifice" all of humanity to create superintelligent AI gods. We're not in any position to do this right now, but if we *were*, he'd push the big red button.

The clip above comes from a conversation with Daniel Faggella, who explicitly says that we should create our successors--or what he calls our "Worthy Successors": AIs that take over the world and replace humanity. This is the ultimate "moral aim" of building AGI, he claims.

The influential computer scientist Richard Sutton says something similar, arguing that the succession to AI is inevitable and that we should welcome it:

Sutton explicitly cites Hans Moravec, who describes himself as "an author who cheerfully concludes that the human race is in its last century, and goes on to suggest how to help the process along." Moravec has played a significant role in the development of TESCREALism.

Larry Page, the cofounder of Google (!), says that AI is the natural next step in cosmic evolution, and that we should sit back and let AIs take over the world. Google owns DeepMind, one of the leading companies trying to create AGI.

Elon Musk himself is pushing hard to build AGI with his own company xAI, and claims that virtually all “intelligence” in the future will be artificial--not biological. Our intelligence could simply serve as a "backstop" for digital intelligence.

Another noted AI researcher, Jürgen Schmidhuber, says that "humans will not remain the crown of creation. ... But that's okay." Um, is it?

The leading TESCREALists Nick Bostrom and Toby Ord say something similar: we must create or become "posthumans" in order to fulfill our "long-term potential" in the universe. This is not negotiable: Homo sapiens ain't the future, digital posthumans are.

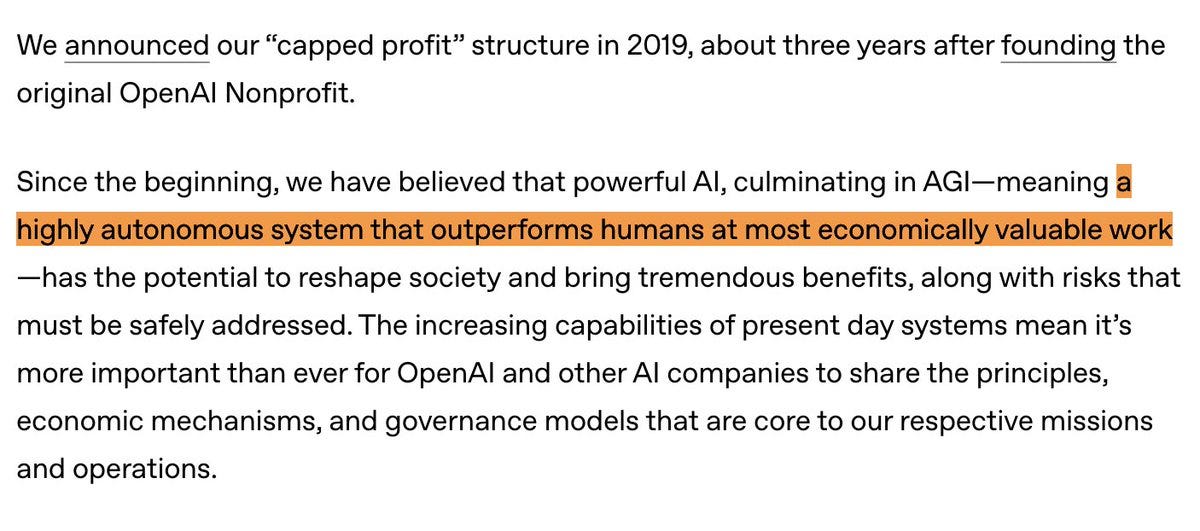

OpenAI even defines "AGI" as a system that could replace most humans in the economy. And what happens when humans become economically superfluous in a capitalist system? Why keep us around? The question is rhetorical.

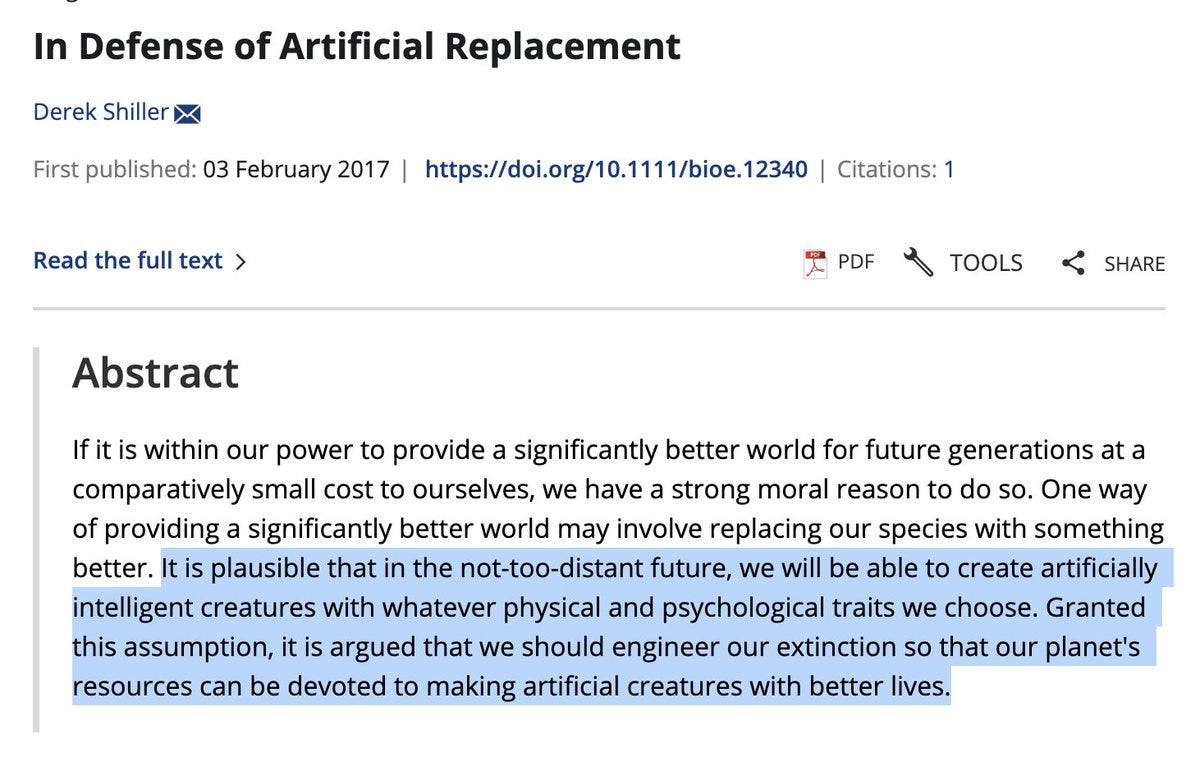

The EA longtermist Derek Shiller argues that "we should engineer our extinction so that our planet's resources can be devoted to making artificial creatures with better lives." Yes, these people are serious about this: jettison humanity from the ship of existence. Buh-bye!

Adam Kirsch wrote an entire book about the anti-humanist/pro-extinctionist attitudes among transhumanists, titled "The Revolt Against Humanity." It's worth a read:

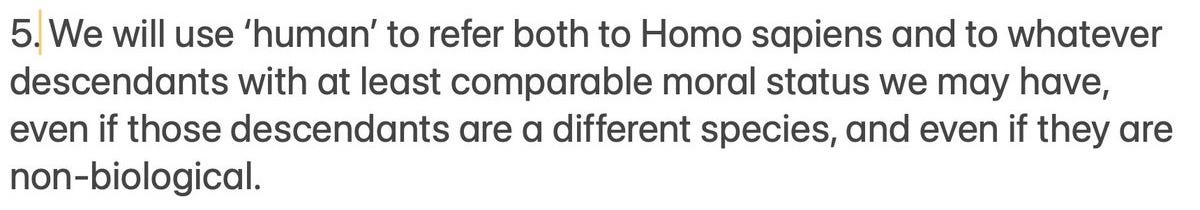

What's imperative to understand is that, as Kokotajlo alludes to, the way these people typically define "humanity" or "humans" is NOT the way everyone else defines the term. They define it as "our species AND whatever successors we might have." For example, MacAskill co-writes:

Similarly, Ord and Bostrom write in separate publications:

This is very *very* important to understand because it implies that our species, Homo sapiens, could disappear, be annihilated, or simply fade out of existence WITHOUT "human extinction" having occurred. *So long as* we are REPLACED by a successor species of AIs or some other digital posthuman, "humanity" will persist.

This is the sneaky little trick that they play. It allows them to claim that they OPPOSE "human extinction" when, in fact, they are actually promoting, explicitly or implicitly, the demise of our species in favor of a new posthuman species. If you understand "humanity" as denoting our species, then all of these ideologies--transhumanism, longtermism, accelerationism, and other TESCREAL-related views--are pro-extinctionist either explicitly or in practice. Do not be fooled by this: you and I have no place in the future.

But it's even worse than that! Many of these people couldn't care less about whether the biological world as a whole--including our nonhuman companions on Earth--survives the "utopia" they're creating. Here's William MacAskill on our systematic obliteration of the biosphere:

At the heart of TESCREALism is a deeply poisonous eschatological vision according to which the future is digital, not biological. And not just the future, but the NEAR future since they hope to catalyze the transition to a new digital world very soon--within our lifetimes.

I have written about this on numerous occasions. Here are two examples from Truthdig: https://www.truthdig.com/articles/the-endgame-of-edgelord-eschatology/ AND https://www.truthdig.com/articles/team-human-vs-team-posthuman-which-side-are-you-on/.

As I write in the first, when we list global threats to our survival, we should include TESCREALism right there alongside thermonuclear conflict, global pandemics, catastrophic climate change, asteroid impacts, and so on. In fact, TESCREALism probably poses a far greater threat than these other hazards. Another good article on this general topic comes from Naomi Klein and Astra Taylor: https://www.theguardian.com/us-news/ng-interactive/2025/apr/13/end-times-fascism-far-right-trump-musk.

These people and their ideologies should frighten you, as they oppose all that is good and worthwhile on this marvelous little spaceship called Earth. There is no Planet B, yet they are destroying our Planet A to fulfill their pernicious eschatological fantasies. Fight back, now.

Here's one final example for you, courtesy of Vox:
