“Who is going to tell the billionaires in their underground bunkers what to do when the singularity comes knocking? We haven’t even colonised Mars yet and already the future is past.”—Dr. Alexander Thomas, a critic of TESCREALism, speaking sarcastically after hearing that FHI is closing.

As many of you have heard, the Future of Humanity Institute (FHI) shut down on April 16. Because I care about the future of humanity, I believe this is a very good thing.

Here are some hastily written thoughts on the matter:

Background: Oxford University had been frustrated with FHI for years. The Philosophy Faculty “imposed a freeze on fundraising and hiring” in 2020. I’m told by sources—though I have not independently verified these claims—that Bostrom’s racist email and his abysmal “apology” for that email were the final straw. After the email scandal broke in early 2023 (I first stumbled upon the email in December of 2022, and then contacted FHI to verify its authenticity), Nick Bostrom apparently took leave to finish his book Deep Utopia, which appears to mostly be a flop so far. After Bostrom returned, Oxford gave FHI the boot.

I’m told that there was some discussion about Anders Sandberg taking over, though that was vetoed for reasons that aren’t clear. It’s also worth noting that Bostrom is no longer a faculty member at Oxford.

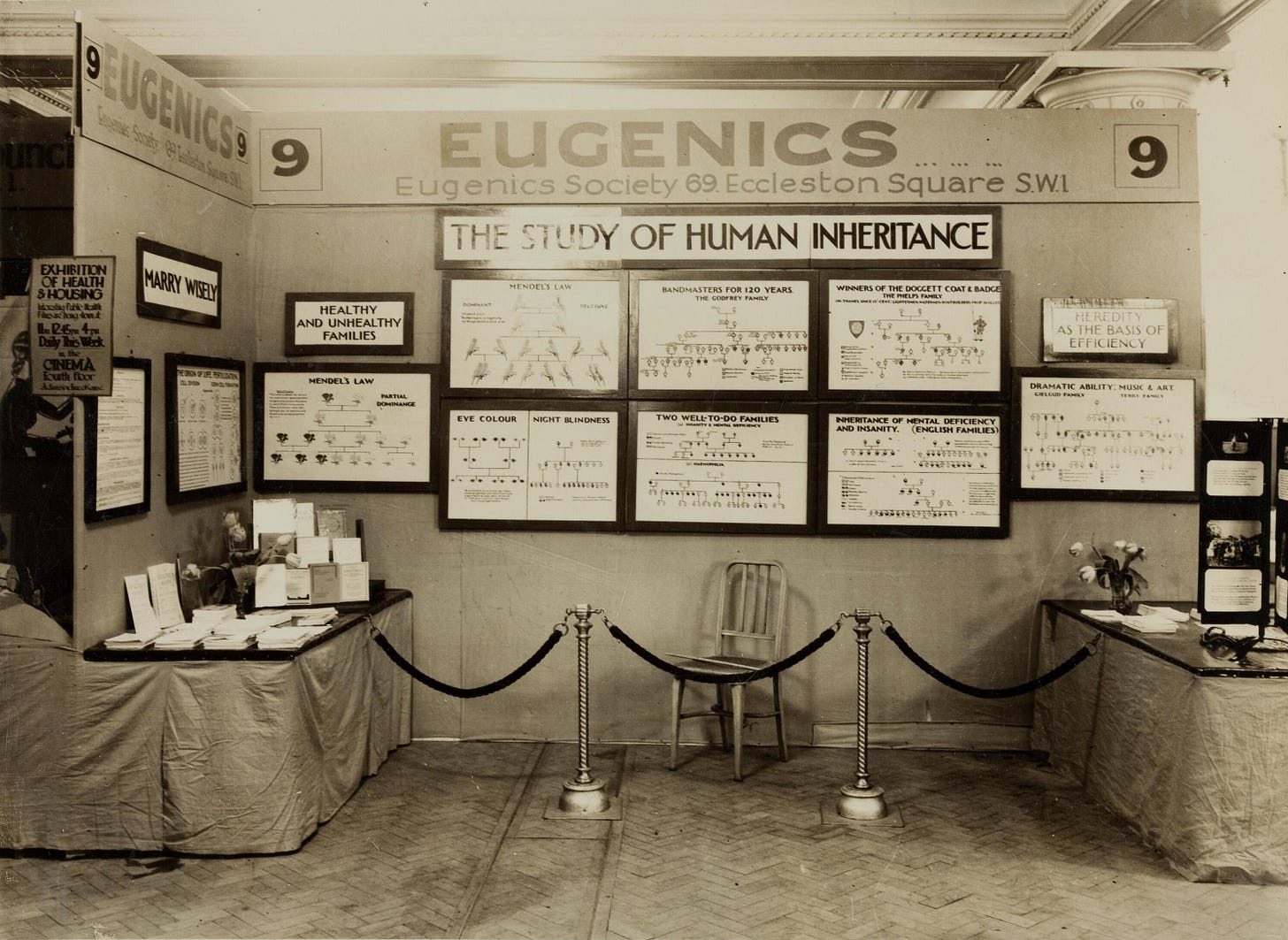

Bostrom founded FHI in 2005, in large part to advance the neo-eugenics project of transhumanism.

This is pretty much all I know about the situation. I was told several months ago that FHI would be closing, though it seems that some folks affiliated with FHI only found out after April 16.

Entitlement: A “final report” about FHI, written by Sandberg, states:

While FHI had achieved significant academic and policy impact, the final years were affected by a gradual suffocation by Faculty bureaucracy. The flexible, fast-moving approach of the institute did not function well with the rigid rules and slow decision-making of the surrounding organization. (One of our administrators developed a joke measurement unit, “the Oxford”. 1 Oxford is the amount of work it takes to read and write 308 emails. This is the actual administrative effort it took for FHI to have a small grant disbursed into its account within the Philosophy Faculty so that we could start using it—after both the funder and the University had already approved the grant.)

What strikes me about this passage is the stunning entitlement. FHI wanted to leech off of Oxford’s prestige, while at the same time, it seems, operating outside of its rules and norms. Yes, universities are too conservative, and the institutional inertia can be frustrating. But then why didn’t FHI just leave Oxford? After all, the institute has direct connections to multiple billionaires who would no doubt have funded it.

If you want to benefit from Oxford’s name and reputation, then play by the rules—that’s what everyone else does. Heck, so far as I know, FHI folks like Bostrom, Sandberg, Toby Ord, and others, had no teaching duties at all. They did not have to grade papers, to answer emails from students. They were paid by one of the best universities in the world to sit around and ponder the “big questions” of life, the universe, and everything. This is probably one of the most privileged job situations that anyone in academia could end up in—and yet Sandberg complains about having to read and write 308 emails to get a grant disbursed. Please! That’s how many emails most professors have to read and write to students and administrators every couple of weeks throughout an entire semester! In many ways, Oxford was incredibly good to FHI—and yet Sandberg throws them under the bus in his “report” rather than thanking Oxford for everything it did to support FHI over the years. Unbelievable.

Irony: FHI helped to found the longtermist movement. A central task of longtermism is to anticipate and mitigate risks that threaten to destroy humanity’s long-term future. It’s ironic, then, that the longtermist movement has been consistently bad at anticipating and mitigating risks that threaten to destroy its own future.

Longtermists failed to anticipate and mitigate the risks posed by Sam Bankman-Fried, despite ample warning that Bankman-Fried is a dishonest and unethical person (Bankman-Fried even once described DeFi as, basically, a Ponzi scheme—and none of the longtermist leadership cared).

Longtermists failed to anticipate and mitigate the negative press that buying Wytham Abbey, a huge castle in Oxfordshire, would cause. They recently had to sell it (another womp-womp).

Longtermists failed to anticipate and mitigate the backlash that (a) Bostrom’s “apology” for his racist email and (b) Sandberg’s attempt to excuse Bostrom’s offensive language, would generate. (Making matters worse, Bostrom appears to have referred to social justice advocates as “bloodthirsty mosquitos” on his personal website after this snafu. See below.)

Longtermists weren’t even able to keep FHI from being shut down.

How much evidence do we need that longtermists aren’t good at anticipating and mitigating risks? Just about all of the Big Scandals since 2022 have been caused by their own foolish actions—these are “own goals,” instances of someone punching themselves in the face. And yet they want to control how advanced AI gets developed because they believe they have some special esoteric insights about the hypothetical risks posed by this technology, and how best to mitigate these highly speculative future risks? Come on.

The “Future of Humanity Institute” was a misnomer. The institute’s name suggests that its goal was to study the future of humanity. No, its goal was to advance an extremely narrow, deeply impoverished, highly problematic vision of what the future ought to look like: a techno-utopian world in which we radically reengineer humanity, spread beyond Earth, plunder what longtermists call our “cosmic endowment” of resources, and build literally planet-sized computers in which trillions and trillions of “happy” digital people can live. This is just techno-capitalism on steroids, and indeed Bostrom (in 2013) even defined “existential risk” as any event that would prevent us from reaching a stable state of “technological maturity,” i.e., a state in which we have fully subjugated nature and maximized economic productivity.

The utopian vision that FHI developed is thoroughly Western, Baconian, and capitalistic, and hence it does not represent the interests of most of humanity. It was designed almost entirely by extremely privileged white men at elite universities (Oxford, Cambridge) and in Silicon Valley, and consequently it reflects their particular views of the world—not the views of humanity more generally.

Indeed, one of the most striking features of the FHI/TESCREAL literature is the conspicuous absence of virtually any reference whatsoever to what the future could or, more importantly, should look like from the perspectives of Indigenous communities, Afrofuturism, feminism, Queerness, Disability, Islam, and other non-Western perspectives and thought traditions—or even the natural world. Heck, Bostrom’s colleague William MacAskill even argues in What We Owe the Future that our ruthless destruction of the environment might be net positive: wild animals suffer, so the fewer wild animals there are, the less wild-animal suffering there will be. It is, therefore, not even clear that nonhuman organisms have a future in the techno-utopian world of TESCREALism.

The “utopian” vision of FHI looks like an utter dystopian nightmare from most other perspectives. Indeed, this is why I have argued that avoiding an “existential catastrophe” would be catastrophic for most of humanity: by definition, avoiding an existential catastrophe means realizing the techno-utopian visions of Bostrom, MacAskill, and the other longtermists. But these visions, if realized, would further marginalize the marginalized, if not completely eliminate such groups. Indeed, since transhumanism—which aims to create a “superior” new “posthuman” species—is central to fully realizing the longtermist utopian vision, avoiding an existential catastrophe would likely result in the extinction of our species. (See this article of mine for a more detailed discussion.)

Because I do not advocate bringing about the extinction of our species, and because FHI was peddling and promoting a worldview in which this would be the likely outcome, I am glad that FHI is shutting down—precisely because I care about “the future of humanity.”

Folks associated with FHI seemed to think of themselves as particularly deep thinkers. But when one zooms out a bit and realizes that there are whole universes of thought about humanity’s future that FHI completely ignores—because they don’t fit the white, male, Western perspective that FHI embodies—these folks actually look like extremely shallow thinkers.

Reputational harms. In many ways, FHI has been a huge net negative. It has, I would argue, set back the field of Futures Studies, my disciplinary home. Consider this tweet from Dr. Timnit Gebru, which expresses a feeling that many people have had since longtermism started showing up everywhere in mid-2022:

There is no reason that these terms should make anyone “cringe,” and yet FHI—in large part—has succeeded in doing just that.

Relatedly, consider a topic that I’m passionate about: the ethics of human extinction. Over the past decade, this field has been completely dominated by longtermism—a very specific and narrow approach to the topic. How has longtermism come to dominate this field? In part because of all the funding that longtermist organizations have received from tech billionaires, including Sam Bankman-Fried.

This is a real shame because longtermism is just one of many ways of thinking about the ethics of human extinction. Yet when most people hear of “the ethics of human extinction,” they often immediately think of “existential risks” and “longtermism.” And because most philosophers think that “existential risks” and “longtermism” are complete nonstarters, they immediately dismiss the broader field of the ethics of human extinction (the topic of Part II in my latest book). Consequently, I think that longtermism has done real damage to and impeded progress within this field.

(Note that, in an effort to rectify this problem, I am starting an “ethics of human extinction” reading group, which will explore all the various positions of the field, not just longtermism. Dr. Roger Crisp will be our first speaker on May 3.)

More on Bostrom: FHI was, as noted, founded by Bostrom. For those who have forgotten, here are a few highlights from things that he’s written:

- One type of existential risk, he writes, is:

“Dysgenic” pressures

It is possible that advanced civilized society is dependent on there being a sufficiently large fraction of intellectually talented individuals. Currently it seems that there is a negative correlation in some places between intellectual achievement and fertility. If such selection were to operate over a long period of time, we might evolve into a less brainy but more fertile species, homo philoprogenitus (“lover of many offspring”).

However, contrary to what such considerations might lead one to suspect, IQ scores have actually been increasing dramatically over the past century. This is known as the Flynn effect. It’s not yet settled whether this corresponds to real gains in important intellectual functions.

Moreover, genetic engineering is rapidly approaching the point where it will become possible to give parents the choice of endowing their offspring with genes that correlate with intellectual capacity, physical health, longevity, and other desirable traits.

In any case, the time-scale for human natural genetic evolution seems much too grand for such developments to have any significant effect before other developments will have made the issue moot.

In fact, eugenics and race science are pretty much everywhere one looks within the longtermist and existential risk movement, as discussed more here.

- Trivializing and minimizing the worst disasters and atrocities in human history:

Our intuitions and coping strategies have been shaped by our long experience with risks such as dangerous animals, hostile individuals or tribes, poisonous foods, automobile accidents, Chernobyl, Bhopal, volcano eruptions, earthquakes, draughts, World War I, World War II, epidemics of influenza, smallpox, black plague, and AIDS. These types of disasters have occurred many times and our cultural attitudes towards risk have been shaped by trial-and-error in managing such hazards. But tragic as such events are to the people immediately affected, in the big picture of things – from the perspective of humankind as a whole – even the worst of these catastrophes are mere ripples on the surface of the great sea of life. They haven’t significantly affected the total amount of human suffering or happiness or determined the long-term fate of our species.

- The collapse of global civilization would be “a small misstep for mankind,” so long as we could bounce back and eventually reach the stars:

What distinguishes extinction and other existential catastrophes is that a comeback is impossible. A non-existential disaster causing the breakdown of global civilization is, from the perspective of humanity as a whole, a potentially recoverable setback: a giant massacre for man, a small misstep for mankind.

- On pages 465-466 of this paper, Bostrom takes seriously the idea of invasive, global mass surveillance of everyone on the planet to prevent “civilizational devastation.” He also spoke about this in a TED Q&A, where he made clear that he’s dead serious about this.

- Here’s what he wrote in the aftermath of the racist email scandal:

Hunkering down to focus on completing a book project (not quite announcement-ready yet). Though sometimes I have the impression that the world is a conspiracy to distract us from what's important - alternatively by whispering to us about tempting opportunities, at other times by buzzing menacingly around our ears like a swarm of bloodthirsty mosquitos.

It’s pretty clear that the “swarm of bloodthirsty mosquitos” is a reference to the folks who were upset with his “apology” for writing (quoting him) that “Blacks are more stupid than whites,” after which he mentioned the N-word. In his “apology,” he said that he regretted writing the N-word, but didn’t walk back his unscientific and ethically abhorrent claim that certain “racial” groups might be less “intelligent” than other groups.

Conclusion. I am not sad that FHI has shut down. The community that coalesced around FHI was deeply toxic, as I explore here, and its techno-utopian fantasies are not only dangerous but extremely impoverished.

When FHI folks talk about the “future of humanity,” they aren’t talking about the future of all of humanity. They’re talking about realizing a very particular quantitative, colonialist, expansionist, Baconian, capitalistic vision, which they hope to impose on everyone else, whether others—you and I—like it or not. How can one be a serious futurist if one isn’t even curious about alternative visions of the future? (That’s a rhetorical question.)

If FHI closing undermines the massive influence of its techno-futurist ideology (especially within Silicon Valley), this would be good news for humanity’s collective future.

Finally, it seems that many accelerationists (a variant of the TESCREAL movement) see the end of FHI as a win for their ideological project. That may be the case, though the accelerationist project is even more reckless, impoverished, unsophisticated, and dangerous than longtermism—in my opinion.

Farewell, FHI. It seems that the longtermists had a problematically low p(doom) for the world of FHI ending. Fortunately, they’re going to save us from machine superintelligence and all the other advanced technologies that they simultaneously believe we must eventually build because these technologies are necessary to realize their version of “utopia.”

For more on the TESCREAL bundle of ideologies, see my recent paper with Dr. Gebru in First Monday: https://firstmonday.org/ojs/index.php/fm/article/view/13636.

Thanks to Keira Havens for insightful comments on an earlier draft.

Have TESCREALists considered that if they desire a long term future for "the light of human consciousness", however they define "human," then eliminating themselves might be the most effectively altruistic course of action they could take? In the back of my mind I hear a 1960s Star Trek killer robot self-destructing: Error, Error, Error....

On the subject of "dangerous animals" and quantified suffering, I once met a grizzly bear and cub in the wild who were far more "happy" than a William MacAskill will ever give them credit for. How could I know that, a TESCREAL skeptic might ask? Because I'm alive, and everything about them expressed that contentment implicitly.