What Is the "TESCREAL Bundle"? Answering Some Frequently Asked Questions

The TESCREAL concept is essential for understanding the race to build AGI. Here's why.

This document was written by Émile P. Torres. Note that my coauthor, Dr. Timnit Gebru, does not necessarily (and in some cases does not) endorse everything I write below. This is primarily my own take on the topic.

TABLE OF CONTENTS:

1. What does “TESCREAL” mean?

2. Who coined the acronym “TESCREAL”?

3. Can you give some examples of TESCREALists?

4. Why is the TESCREAL concept important? How does it connect to the AGI race?

5. Are you saying that all EAs are TESCREALists?

6. I am confused about cosmism. Why include it in the acronym?

7. What is AI accelerationism and how does it fit into the TESCREAL framework?

8. Is TESCREALism a “conspiracy theory”? No.

9. What does TESCREALism have to do with eugenics?

10. Is TESCREALism a religion? Is it a cult?

12. What is an “existential risk” and how does it relate to the TESCREAL bundle?

13. Do TESCREALists advocate for the extinction of humanity?

14. What can be done to counteract the influence of TESCREALism?

1. What does “TESCREAL” mean?

The acronym “TESCREAL” stands for seven interconnected and overlapping ideologies: Transhumanism, Extropianism, Singularitarianism, modern Cosmism, Rationalism, Effective Altruism, and Longtermism.1

See the paper that I recently coauthored with Dr. Timnit Gebru for a detailed explanation of each ideology. A more accessible summary can be found in a Truthdig article of mine, here.

2. Who coined the acronym “TESCREAL”?

In late 2022, Dr. Timnit Gebru and I began to collaborate on a project to understand the origins of the current race to build artificial general intelligence, or AGI. How did this race take shape? What is driving it?

It wasn’t that long ago that many researchers in AI-related fields distanced themselves from the goal of building “machines performing the most advanced human thought activities,” partly “because they did not want to be associated with grandiose claims that researchers didn’t deliver on.” As Gebru and I write: “Such claims led to the ‘AI winters’ of the 1970s and 1990s, with much of the research focused on building ‘general intelligence’ losing funding. After that, the field was mostly focused on building specialized systems that some call ‘narrow AI.’”

So, what changed? How did AGI become the explicit goal of the biggest and best-funded AI companies in the world: DeepMind, OpenAI, Anthropic, and xAI?

As our project moved forward, it became clear that we cannot give a complete explanation of the current race to build AGI without reference to seven ideologies: the TESCREAL ideologies. The values, futurological visions, and tech-world influence of these ideologies are absolutely integral to understanding the AGI race. Because many leading figures with respect to AGI are affiliated with multiple ideologies, Gebru and I found that mentioning each ideology separately was becoming unmanageable. Consequently, I proposed the acronym “TESCREAL” to streamline our discussion. (This has the virtue of listing these ideologies in approximately the same order that they emerged historically.)

We then started using the terms “TESCREAList” to refer to any individual associated with the techno-utopian vision that lies at the heart of the TESCREAL bundle, and “TESCREALism” to refer (roughly) to this techno-utopian vision. According to this vision, advanced technologies will soon enable us to create a utopian world in which we will reengineer humanity, overcome scarcity, colonize space, and ultimately establish a sprawling multi-galactic civilization. We may even upload our minds to computers, become immortal, and completely abolish suffering.

For descriptions of what this techno-utopian future offers, see Marc Andreessen’s “Why AI Will Save the World” and “The Techno-Optimist Manifesto,” Nick Bostrom’s “Letter from Utopia,” and chapter 8 of Toby Ord’s book The Precipice. See also these two articles of mine (here and here) for further discussion.

3. Can you give some examples of TESCREALists?

Yes. Here are a few examples:

Nick Bostrom has been one of the most influential figures within the TESCREAL movement. A self-described “polymath,” he has promoted the idea that advanced technologies—especially AGI—could bring about a utopian future in which we become “semi-mortal uploaded creatures with Jupiter-sized minds.” In his 2020 “Letter from Utopia,” he writes that our superintelligent posthuman descendants will have such “immense silos of [pleasure] in Utopia” that they will “sprinkle it in [their] tea.” He calls the project of creating this utopia “paradise-engineering.”

Bostrom is arguably the most prominent transhumanist in the world. During the 1990s, he participated in the Extropian movement. It was on the Extropian mailing list that he sent his now-infamous email in which he said that “Blacks are more stupid than whites” and then wrote the N-word. A good deal of his work since then has focused on the singularitarian notion of an “intelligence explosion” triggered by recursively self-improving AI. This is one of the main definitions of “the Singularity.” His 2014 book Superintelligence examines what could go wrong with the Singularity, while his 2024 book Deep Utopia looks at how AGI could bring about what he calls a “solved world.”2

Bostrom also has extensive connections with the Rationalist and Effective Altruist (EA) communities. He has been active on the Rationalist website LessWrong since at least 2007, and Eliezer Yudkowsky, who founded Rationalism, is a close collaborator.3 The Rationalist community tends to see Bostrom as one of the movement’s most important figures. Another close collaborator of Bostrom’s is Toby Ord, who cofounded the EA movement with William MacAskill in 2009. Both Ord and MacAskill have held positions at Bostrom’s now-defunct Future of Humanity Institute; Ord joined the team as a Research Associate in early 2007, before EA existed. Prior to being shut down, the Future of Humanity Institute shared office space with the leading EA organization, the Centre for Effective Altruism,4 and in 2015 Bostrom presented his research at the leading EA conference, EA Global.

Finally, Bostrom cofounded the longtermist ideology with an EA named Nick Beckstead.5 Longtermism is one of the main “cause areas” within the EA community, and both Ord and MacAskill are longtermists.

Ben Goertzel, like Bostrom, is a transhumanist who participated in the Extropian movement. He has written frequently about the Singularity, is the founder and CEO of SingularityNET.io, and has given talks about AI at the Singularity Summit, which was founded by Peter Thiel and two singularitarians: Yudkowsky and Ray Kurzweil. Goertzel also “founded the OpenCog open-source AGI software project,” was Chairman of the Artificial General Intelligence Society, held the position of Vice Chairman at Humanity+, an organization originally cofounded by Bostrom as the World Transhumanist Association, and participated in the early Rationalist community.

In his 2010 A Cosmist Manifesto, Goertzel introduced modern cosmism, which imagines a techno-utopian future among the stars that is almost indistinguishable from the vision of longtermism. He writes that “we will spread to the stars and roam the universe,” create “synthetic realities” (virtual-reality worlds), and “develop spacetime engineering and scientific ‘future magic’ much beyond our current understanding and imagination.” (See question #6 for further discussion.)

Eliezer Yudkowsky is a prominent transhumanist who also participated in the Extropian movement. A self-described “genius,” he started off as a singularitarian who believed that we should create AGI as soon as possible; in other words, he was an “AI accelerationist.” He has since become the most famous “AI doomer” and helped popularize the term “p(doom),” an expression of one’s credence that AGI will cause our extinction rather than bring about utopia.

Around 2009, Yudkowsky launched LessWrong as a community blogging website. It was out of this website that the Rationalist movement emerged. He has been very influential among EAs,6 and has been a contributor to the Effective Altruism Forum, the main online hub of EA, since 2007.7 His techno-utopian vision of, in his words, our “glorious transhuman future” among the stars is virtually identical to the visions of cosmism and longtermism.8

In addition to his collaborations with Bostrom, Yudkowsky previously hired Goertzel as the Director of Research at the Singularity Institute for Artificial Intelligence, which he founded in 2000. In 2013, it was renamed the Machine Intelligence Research Institute (MIRI).9 MIRI has been funded by Peter Thiel (the keynote speaker at the 2013 Effective Altruism Summit), Vitalik Buterin (a recipient of the Thiel Fellowship), and Jaan Tallinn (the almost-billionaire cofounder of Skype), along with TESCREAL organizations like Open Philanthropy, the Berkeley Existential Risk Initiative, and the Future of Life Institute. The Future of Life Institute includes Bostrom and Elon Musk on its advisory board and was cofounded by Max Tegmark and Jaan Tallinn, among others. Both Tegmark and Tallinn delivered talks at Yudkowsky’s Singularity Summit (here and here), as well as EA Global (here and here).10

Sam Altman, according to a New York Times profile, is “the product of a strange, sprawling online community that began to worry, around the same time Mr. Altman came to the Valley, that artificial intelligence would one day destroy the world. Called rationalists or effective altruists, members of this movement were instrumental in the creation of OpenAI.” Another profile affirms that Altman “embraced the techy-catnip utilitarian philosophy of effective altruism.”

Altman is also a transhumanist who believes that our brains will be digitized in the near future (within our lifetimes), and was one of 25 people to sign up with Nectome, a startup offering to preserve people’s brains so they can be uploaded to computers. He says that Yudkowsky is the reason that he and many others became interested in AGI, and agrees with Yudkowsky that “future galaxies are indeed at risk” when it comes to getting AGI right. That is to say, concurring with many EA-longtermists, he believes that if we create a “magic intelligence in the sky” (his phrase) that is properly “aligned” with the techno-utopian vision of TESCREALism, then we will get to colonize the universe and reengineer galaxies. But if we create a “value-misaligned” AGI, the result could be “lights out for all of us.” He says that “I do not believe we can colonize space without AGI.”

Elon Musk says that longtermism “is a close match for my philosophy.” In 2022, he retweeted a link to Bostrom’s paper “Astronomical Waste,” accompanied by the line “likely the most important paper ever written.” This paper made the case that we should try to spread into space as soon as possible. Once there, we should build “planet-sized” computers that run virtual reality worlds full of trillions and trillions of digital people. Musk believes that we may be on the verge of triggering the Singularity, and his company Neuralink aims to fulfill a key part of the transhumanist project: merging our brains with AI, thereby jumpstarting “the next stage of human evolution,” namely, “transhuman evolution.”

Echoing singularitarians like Ray Kurzweil and longtermists like Bostrom, Toby Ord, and William MacAskill, Musk writes that “we have a duty to maintain the light of consciousness, to make sure it continues into the future” and that “what matters ... is maximizing cumulative civilizational net happiness over time.” According to Vice, “Musk was going to tweet about ‘Rococo’s Basilisk’ … when he discovered that Grimes had made the same joke three years earlier and [so he] reached out to her about it.” “Rococo’s Basilisk” is a reference to “Roko’s basilisk,” a bizarre idea that was first discussed on Yudkowsky’s website LessWrong. It was, in other words, through the Rationalist community that Musk and Grimes met.

These are just a few examples. In addition to Bostrom, Goertzel, Yudkowsky, Altman, Musk, Kurzweil, Ord, Beckstead, and MacAskill, other people who I would classify as TESCREALists—or describe as having a very close connection to the TESCREAL movement—are Shane Legg, Demis Hassabis, Anders Sandberg, Jaan Tallinn, Paul Christiano, Dustin Moskovitz, Vitalik Buterin, Larry Page, Guillaume Verdon, Marc Andreessen, Leopold Aschenbrenner, Elise Bohan, Hilary Greaves, Rob Bensinger, Nate Soares, Eric Drexler,11 Robin Hanson, Max Tegmark, Daniel Kokotajlo, Liron Shapira, Max More, Connor Leahy, Scott Alexander, Dario Amodei, Daniela Amodei, Holden Karnofsky, Dan Hendrycks, Stuart Russell, Peter Thiel, and Sam Bankman-Fried.12

Note: a comprehensive list of members of the TESCREAL movement can be found on a little-known website, here.13 An exhaustive catalogue of the incestuous funding connections between TESCREAL organizations and wealthy donors can be found here. The data contained on these websites further support a “strong” interpretation of the TESCREAL thesis that Gebru and I defend in our paper. See question #8 for an explanation of the “weak” and “strong” interpretations of our thesis.

4. Why is the TESCREAL concept important? How does it connect to the AGI race?

The behaviors of companies in our capitalist economic system can, in most cases, be fully explained by the profit motive. Why are the fossil fuel companies ruining the planet? Why did they spread disinformation about climate change? Because their goal is to maximize profit; because their sole obligations are to their shareholders. I would argue that the leading AI companies—DeepMind, OpenAI, Anthropic, and xAI—are different. They constitute anomalies. Why? Because the profit motive is only half of the explanatory picture.

It is true that Google (which acquired DeepMind in 2014), Microsoft (which has invested $13 billion in OpenAI), and Amazon (which has invested $4 billion in Anthropic) expect to make billions of dollars in profits from the AI systems that these companies are building.

But the decision to pursue AGI in the first place cannot be fully explained by the profit motive. The other half of the picture thus concerns the TESCREAL bundle of ideologies. It is these ideologies that account for the origins of the race to build AGI, and they continue to shape and drive this race up to the present. If not for the TESCREAL ideologies, almost no one would be talking about “AGI” right now.

To make sense of what’s going on with AGI, it is thus crucial to understand these ideologies. A complete explanation of the AGI race is impossible without reference to both TESCREALism and the profit motive. Indeed, Gebru and I contend that all of the leading AI companies directly emerged out of the TESCREAL movement. Consider that:

DeepMind was cofounded by Shane Legg, Demis Hassabis, and Mustafa Suleyman. Legg is a transhumanist who has presented his research on AI at the Singularity Summit (founded by Yudkowsky, Ray Kurzweil, and Peter Thiel). He received $10,000 from the Canadian Singularity Institute for Artificial Intelligence for his 2008 PhD dissertation titled “Machine Super Intelligence,” and has exchanged emails on the SL4 mailing list, founded by Yudkowsky in 2001, which describes itself as “a refuge for discussion of advanced topics in transhumanism and the Singularity.” He also has had a profile on Yudkowsky’s website LessWrong since 2008, which he used to comment under LessWrong posts by Yudkowsky and others. In an interview with LessWrong from 2011, one year after he cofounded DeepMind, Legg expressed Yudkowsky’s view that AGI is the number one threat facing humanity this century, adding that there’s a 50% chance of human-level AI by 2028. Legg also believes that, if we get AGI right, the outcome could be “a golden age of humanity.” He says that he read Ray Kurzweil, the most prominent singularitarian, in the early 2000s and became convinced that Kurzweil was “fundamentally right” that computation would continue to grow exponentially.

Hassabis also presented his research at the Singularity Summit. It was after the 2010 Singularity Summit that he followed Peter Thiel back to Thiel’s mansion and asked him for money to start DeepMind. Thiel then invested $1.85 million into DeepMind, and when Google acquired the company in 2014, “Thiel’s venture capital firm, Founders Fund, owned more shares than all three of DeepMind’s co-founders.” Elon Musk was another investor in DeepMind, and Jaan Tallinn—one of the most prolific financial supporters of the TESCREAL movement—“was an early financial supporter and board member.”

In 2011, after DeepMind was founded, Hassabis gave a talk about AI at Bostrom’s Future of Humanity Institute, and later included Bostrom as a member of DeepMind’s “Ethics and Society” team. Six years later, Hassabis joined Tallinn, Musk, Kurzweil, Bostrom, and Max Tegmark on stage at a conference on AI hosted by the Future of Life Institute, the TESCREAL organization cofounded by Tegmark, Tallinn, and others (which initially received $10 million in funding from Musk for “the world’s first academic grant program in AI safety,” and has more recently received $665 million from the crypto TESCREAList Vitalik Buterin). When Hassabis was asked whether we should build a superintelligent machine, despite the potential existential risks, he answered “yes.” Echoing other TESCREALists, he claims that AGI/superintelligence is “going to be the greatest thing ever to happen to humanity,” if we get it “right.”

Less is known about Suleyman’s views, though they appear consistent with the values and goals of TESCREALism. In 2023, he was interviewed on the 80,000 Hours podcast, hosted by an EA organization of the same name (80,000 Hours), which was cofounded by the TESCREAList William MacAskill. At the beginning of the interview, Suleyman states that “I’ve long been a fan of the podcast and the [EA] movement.” He later says that he considers himself, along with many other researchers at DeepMind and OpenAI, to be a part of the “AI safety” community. This community directly emerged out of the TESCREAL movement.14

From its beginning, DeepMind has been intimately bound up with TESCREALism.

OpenAI was cofounded by, among others, Musk and Altman, whose ties to TESCREALism were briefly mentioned above. As the New York Times profile of Altman notes, Rationalism and EA-longtermism “were instrumental in the creation of OpenAI.” Altman credits Yudkowsky with inspiring him to pursue AGI (“he got many of us interested in AGI, helped DeepMind get funded at a time when AGI was extremely outside the Overton window, was critical in the decision to start OpenAI, etc.”15), and wrote on his personal blog the same year that OpenAI was founded (in 2015) that “Bostrom’s excellent book Superintelligence is the best thing I’ve seen on this topic. It is well worth a read.”

OpenAI started with $1 billion in funding from Musk, Thiel, and others, while “Tallinn offered to financially support OpenAI’s safety research and met regularly with [Dario] Amodei [a key figure in the direction of OpenAI] and others at the organization.” More recently, Andreessen Horowitz, cofounded by the TESCREAL accelerationist Marc Andreessen, was one of several venture capital firms that collectively invested more than $300 million into OpenAI. The TESCREAL organization Open Philanthropy has also given OpenAI $30 million. Some leading AI accelerationists have recently praised Altman as one of their own.

Anthropic was founded by seven people who previously worked for OpenAI, including the siblings Dario and Daniela Amodei. Both are EA-longtermists, and Dario was once roommates with Holden Karnofsky, a thought leader within the EA and longtermist movements. Daniela later married Karnofsky.16

Initial funding for Anthropic came from Jaan Tallinn ($124 million), and in 2022 Anthropic received $500 million from FTX, run by the TESCREAList Sam Bankman-Fried. As a New York Times article observes: “all of the major AI labs and safety research organizations contain some trace of effective altruism’s influence, and many count believers among their staff members. … No major AI lab embodies the EA ethos as fully as Anthropic. Many of the company’s early hires were effective altruists, and much of its start-up funding came from wealthy EA-affiliated tech executives,” including the TESCREAList Dustin Moskovitz (a cofounder of Facebook), Tallinn, and Bankman-Fried. Another article notes that “Luke Muehlhauser, Open Philanthropy’s senior program officer for AI governance and policy, is one of Anthropic’s four board members.” Prior to working at Open Philanthropy, Muehlhauser was the Executive Director of Yudkowsky’s Machine Intelligence Research Institute.

Finally, xAI was founded by Elon Musk as a competitor to these other companies. As noted, Musk is a transhumanist and longtermist whose views about AGI have been shaped by leading TESCREALists like Nick Bostrom. Musk hired Dan Hendrycks as an advisor. Hendrycks founded the Center for AI Safety, which received more than $5 million from Open Philanthropy and released a statement about the dangers of AGI that was signed by Altman, Dario Amodei, Shane Legg, Demis Hassabis, Mustafa Suleyman, Ray Kurzweil, Jaan Tallinn, Vitalik Buterin, Dustin Moskovitz, Max Tegmark, and Grimes, among others. A post that Hendrycks coauthored on the Effective Altruism Forum states that he “was advised … to get into AI to reduce [existential risks from AGI], and so settled on this rather than proprietary trading for earning to give.” The idea of “earning to give” was developed by EAs, and is what inspired Bankman-Fried to pursue his career in crypto.

This hardly scratches the surface of the dense, incestuous connections between leading figures within the TESCREAL movement and the leading AI companies.

Note that Gebru and I are not claiming that everyone working for these companies is a TESCREAList, only that (a) the origins of these companies lie in the TESCREAL movement, and (b) the philosophies that motivate the pursuit of AGI have been deeply influenced by TESCREALism. As we write in our paper: “Many people working on AGI may be unaware of their proximity to TESCREAL views and communities. Our argument is that the TESCREAList ideologies drive the AGI race even though not everyone associated with the goal of building AGI subscribes to these worldviews.”

To repeat: I would argue that, if not for the TESCREAL ideologies, there almost certainly wouldn’t be a race to build AGI right now, fueled by hundreds of millions of dollars from wealthy TESCREAL donors and inspired by TESCREAL visions of a posthuman techno-utopia among the stars enabled by “value-aligned” AGI.

5. I am an Effective Altruist, but I don’t identify with the TESCREAL movement. Are you saying that all EAs are TESCREALists?

This is related to the last paragraph above. I wouldn’t say—nor have I ever claimed—that everyone who identifies with one or more letters in the TESCREAL acronym should be classified as “TESCREALists.” The movement that flows through these ideologies, and which has shaped and driven the AGI race, is built around a techno-utopian vision of the future that is broadly libertarian in flavor. It is this movement that we aim to pick out with the acronym “TESCREAL.” There are some members of the EA community who do not care about AGI or longtermism; their focus is entirely on alleviating global poverty or improving animal welfare. In my view, such individuals would not count as TESCREALists. When I talk about “EA,” I am primarily talking about “EA-longtermism”—that is, the longtermist branch of EA, founded by Nick Bostrom and Nick Beckstead and developed by Toby Ord, William MacAskill, Holden Karnofsky, and others.

Similarly, there are democratic-socialist, Christian, and Mormon transhumanists who I would not count as TESCREALists. The movement that interests me corresponds, in part, to what Jaan Tallinn calls the “AI safety and x-risk ecosystem” (where “x-risk” stands for “existential risk”). A more “accelerationist” camp, with less emphasis on “AI safety” and “existential risks,” has recently emerged. This camp has close similarities to the early TESCREAL movement, as many Extropians and singularitarians in the 1990s and early 2000s believed that the risks of emerging technologies are relatively low, the benefits of these technologies outweigh the risks, and free markets are the best way to address whatever risks they might introduce. For discussion of the intimate connections between accelerationism (or e/acc) and TESCREALism, see this article of mine and question #7 below.

In 2022, the transhumanist, EA, and longtermist Elise Bohan published a book titled Future Superhuman. Though Bohan does not use the term “TESCREAL,” as it had not yet been coined, the book offers a compelling account of the TESCREAL movement, showing how these ideologies are interconnected and how the communities corresponding to each have overlapped. I would thus recommend it as a book-length treatment of the TESCREAL bundle.

6. I am confused about cosmism. Wasn’t that a 19th-century movement in Russia? Are you saying that Russian cosmism is popular in Silicon Valley? Why include it in the acronym?

Russian cosmism could be seen as an early version of transhumanism, but this is not what we are referring to in the acronym. Rather, we are referring to modern cosmism. As noted earlier, this was outlined by Ben Goertzel in his 2010 book A Cosmist Manifesto, and its vision of the future is nearly identical to that of longtermism (and AI accelerationism, or e/acc). For Goertzel, a former Extropian who has “been heavily involved in the formation and growth” of the AGI field, cosmism is a more ambitious kind of transhumanism that aims to reengineer not just humanity but the entire universe. See the section on cosmism in our paper.

The reason we included cosmism in the acronym goes back to question #2 above: one cannot give a complete explanation of the origins of the AGI race without some reference to cosmism. Why? Because the techno-utopianism that Goertzel developed into his cosmist worldview is what motivated his own research endeavors within the field of AI, and his work on AI is why we have the term “AGI” today. More specifically, Goertzel and a colleague decided to publish an edited collection of essays in 2007. Yudkowsky was one of the contributors. The working title of the book was Real AI, but Goertzel was unhappy with this. So he asked around for a better term than “real AI.” One of the people who responded to him was a former employee of his, Shane Legg, who suggested the term “artificial general intelligence.” Goertzel then changed the title of his book to Artificial General Intelligence, and this is how the terms “artificial general intelligence” and “AGI” became part of our shared lexicon.

In other words, the central term of contemporary discussions about artificially intelligent systems can be traced back to an Extropian, transhumanist, and cosmist—Goertzel—who got the term from a transhumanist singularitarian—Legg—who subsequently cofounded DeepMind. If not for Goertzel’s belief that advanced AI will play an important role in enabling us to spread beyond Earth, reengineer galaxies, and engage in “spacetime engineering” using “scientific future magic,” we probably wouldn’t be referring to “AGI” right now as “AGI.”17

7. You mentioned “AI accelerationism” above. I have also heard about “effective accelerationism,” or “e/acc.” What is this, and how does it fit into the TESCREAL framework?

I provide a detailed answer to this question in one of my articles, here. In brief, accelerationism should be understood as a variant of TESCREALism. The disagreement between accelerationists and doomers isn’t about what the future should look like. All parties imagine technology enabling us to reengineer humanity, colonize space, and build a giant multi-galactic civilization at the top of the Kardashev scale. The disagreement comes down almost entirely to the perceived risks of AGI: accelerationists think the probability of doom is low, while doomers think the probability is high. This should be understood as a family dispute.

8. Is TESCREALism a “conspiracy theory”?

The claim that it is comes from a self-published Medium article by Dr. James Hughes and Eli Sennesh, titled “Conspiracy Theories, Left Futurism, and the Attack on TESCREAL.” It is worth noting that this article was published on June 12, 2023, before Gebru or I had published a single article about the TESCREAL concept, or had discussed the idea on any podcasts. It was based on nothing more than a few tweets from Gebru and me, and an article in The Washington Spectator that Gebru and I had nothing to do with.18 This would be like Gebru and me writing a harsh review of James Hughes’ forthcoming book without having read the book beforehand.19 Hughes and Sennesh accuse us of a “sloppy approach to intellectual history,” but is there anything sloppier than confidently declaring an idea to be a “conspiracy theory” without knowing anything about what that idea is? Without having read a single article, either academic or in the popular media, about that idea? These questions are rhetorical.

TESCREALism is not a conspiracy theory, defined as “a belief that some secret but influential organization is responsible for an event or phenomenon.” We do not claim that the influence of the TESCREAL ideologies on the AGI race is secret (it’s obviously not), and the evidence for these ideologies being influential is overwhelming. Naomi Klein has a useful discussion of conspiracies in chapter 11 of her book Doppelganger, where she points out that something does not constitute a “conspiracy theory” if the balance of evidence unambiguously supports it.20

Here it may be useful to distinguish between two interpretations of the TESCREAL thesis. We can call these the weak and strong theses. The weak thesis merely states that the race to build AGI has been influenced by the TESCREAL ideologies. That is unarguably true. One cannot talk about the origins of this race, or the beliefs and values that have shaped and driven it, without some reference to the seven ideologies in the TESCREAL acronym.

The strong thesis subsumes the weak thesis, but goes beyond it in claiming that the TESCREAL ideologies should be conceptualized as comprising a single, cohesive bundle. This is what Gebru and I defend in our paper, and we believe the evidence here is also overwhelming. One can group this evidence into three categories: historical, sociological, and ideological.

Historically, these ideologies grew out of each other. Extropianism was the first organized modern transhumanist movement, and gave rise to other forms of transhumanism, namely, singularitarianism and cosmism. Hence, the first four letters in the acronym—“TESC”—are variants of the same thing.

Rationalism was founded in the late 2000s by Yudkowsky, a transhumanist Extropian singularitarian who hired Goertzel, the transhumanist Extropian who founded cosmism, as Director of Research at his Singularity Institute for Artificial Intelligence, later rebranded the Machine Intelligence Research Institute.

EA partly emerged out of the transhumanist movement and was partly developed within the Rationalist blogosphere.21 EA was cofounded by Toby Ord, William MacAskill, and some others. Ord has been collaborating with Bostrom since at least 2004. Two years later, he coauthored a paper with Bostrom defending transhumanism, and in 2007—two years before EA officially started—he joined Bostrom’s transhumanist organization, the Future of Humanity Institute. The EA philosophy was fleshed out via discussions on Rationalist blogs like LessWrong and Overcoming Bias, which was initially run by Yudkowsky and Robin Hanson (a transhumanist Extropian longtermist22) until Yudkowsky moved to LessWrong.23 EA and Rationalism are best understood as siblings, with one focusing on ethics and the other focusing on rationality.

Longtermism was cofounded by Bostrom, a transhumanist Extropian singularitarian with extensive links to EA and Rationalism, along with an early member of the EA community named Nick Beckstead.24 In 2011, Beckstead began to post on the Rationalist LessWrong website. The same year, he submitted an article on (what would later be called) “longtermism” to a writing contest hosted by the Future of Humanity Institute, and won. This article later became his PhD dissertation, one of the founding documents of longtermism. Although the word “longtermism” wasn’t coined until 2017, the EA community was, from the start, deeply interested in Bostrom’s idea that there could be trillions of future posthumans living in computer simulations spread across galaxies, and that superintelligent machines could determine the entire future of the cosmos (our “future light cone”). As Mollie Gleiberman observes, “starting from its inception, the EA movement quietly and consistently advocated for what is now being promoted as ‘longtermism’—a rebranding of the AI-safety/x-risk agenda developed by transhumanists [and related communities] in the early 2000s.”

One can thus imagine these ideologies as a kind of suburban sprawl, resulting in a conurbation of municipalities that share much of the same ideological and sociological real estate. I have argued before that transhumanism can be seen as the “backbone” of the TESCREAL bundle, since every other ideology traces its origins back to this movement, either in part or in whole.

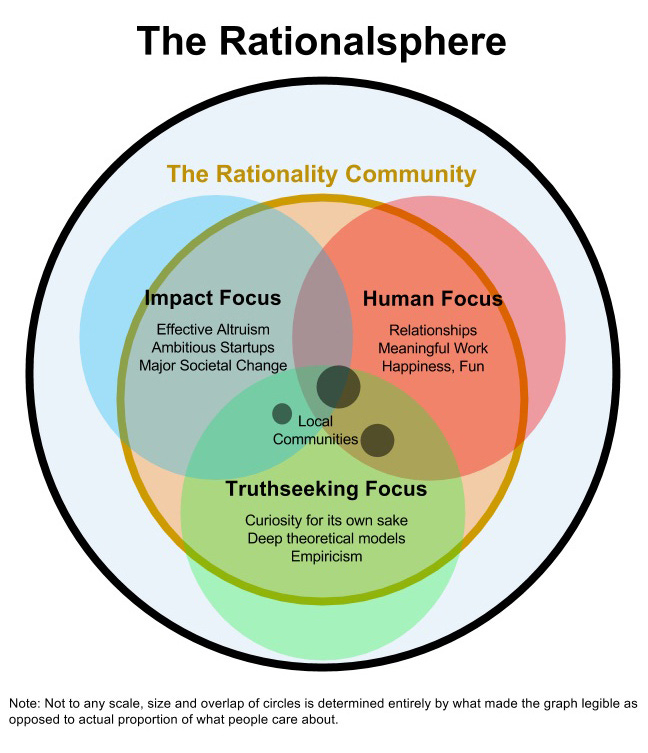

Sociologically, the communities that have coalesced around each ideology have overlapped considerably, as implied above; further examples are noted under question #3. Many singularitarians were Extropian transhumanists; many Rationalists are transhumanists, EAs, and longtermists; and so on. There are some distinctions between these ideologies, and hence their respective communities, but they are minor. If one were to locate these communities on an ideological map, they would initially appear to occupy the same place. Only by zooming in far enough would one find that they are not completely coextensive, though they still overlap considerably.

Ideologically, these communities form something of an “epistemic community.” They share the same value commitments, intellectual tendencies, and visions of the future. They emphasize a particular conception of “rationality,” employ “expected value theory” for decision-making (including ethical decision-making), lean toward utilitarian ethics,25 highly value “intelligence” and IQ, use a similar idiosyncratic vocabulary (e.g., “updating” to mean modifying their beliefs based on new evidence; “p(doom)” to mean their estimated probability that AGI kills us), embrace a linear-progressivist conception of history (according to which we began in a “primitive” state, progressed through agriculture, and reached the pinnacle of human development with industrialization26), are broadly libertarian and generally sympathetic to neoliberalism, claim to eschew politics (which Yudkowsky calls the “mind-killer”), prefer a quantitative/engineering approach to solving all problems, and believe that advanced technologies, especially AGI, will enable us to create a techno-utopian world marked by radical abundance, immortality, space colonization, and “astronomical” amounts of “value” (that is, if AGI doesn’t destroy us).

These are some of the common denominators that bind together transhumanism, Extropianism, singularitarianism, cosmism, Rationalism, and EA-longtermism. When paired with the historical and sociological considerations above, the case for conceptualizing the TESCREAL bundle as a bundle appears very strong. As mentioned under question #5, I am not saying that everyone who identifies with one of these ideologies is a TESCREAList. Rather, TESCREALism is that part of these communities that exemplifies the above features and has played an integral role in shaping and driving the AGI race.

This is the strong thesis, and we believe that the evidence overwhelmingly supports it.

9. What does TESCREALism have to do with eugenics?

As noted earlier, transhumanism is the “backbone” of the TESCREAL bundle. It is also a form of eugenics—philosophers sometimes call it “liberal eugenics” or “neo-eugenics.” This is uncontroversial. Since the “ESC” ideologies in “TESCREAL” are just versions of transhumanism, and since the “REAL” ideologies all emerged, either partially or entirely, out of the transhumanist movement, the TESCREAL bundle as a whole is intimately linked to eugenics. Advocates of the TESCREAL ideologies might not like to acknowledge this fact, but reality is what it is.

Historically, transhumanism was developed by 20th-century eugenicists like Julian Huxley, JBS Haldane, and JD Bernal. I have described transhumanism as “eugenics on steroids” in the following sense: whereas the 20th-century eugenicists wanted to use science and rationality to improve the “human stock,” thus making us the most “perfect” version of our species possible while preventing our species from “degenerating,” transhumanists go beyond this in saying: “Why stop at improving the ‘human stock’? Why not use science and rationality to create an entirely new ‘posthuman stock’—a novel species of cyborgs or completely artificial beings that are superior to us?”

As Julian Huxley wrote: “The human species can, if it wishes, transcend itself—not just sporadically ... but in its entirety, as humanity.” He adds that if enough people “can truly say ... ‘I believe in transhumanism,’ [then] the human species will be on the threshold of a new kind of existence, as different from ours as ours is from that of [Peking] man. It will at last be consciously fulfilling its real destiny.” This idea of our “destiny” in the universe, of “fulfilling” our “longterm potential,” is everywhere in the TESCREAL literature.27

So, the backbone of TESCREALism is a version of eugenics that was developed during the 20th century by leading eugenicists. Unsurprisingly, then, eugenic ideas are widespread within the TESCREAL movement. I have written about this fact at length in this article; here is a very brief survey. Consider that Nick Bostrom identifies “dysgenic pressures” as one type of “existential risk.” The word “dysgenic” was used frequently by 20th-century eugenicists to denote scenarios in which the less “fit” members of society have more children and, by doing so, pass their “unfit” genes on to the next generation. On their view, this causes the overall “genetic fitness” of the human population to decline. Bostrom writes:

It is possible that advanced civilized society is dependent on there being a sufficiently large fraction of intellectually talented individuals. Currently it seems that there is a negative correlation in some places between intellectual achievement and fertility. If such selection were to operate over a long period of time, we might evolve into a less brainy but more fertile species, homo philoprogenitus (“lover of many offspring”).

Bostrom then reassures us that we will probably develop genetic engineering techniques that enable us to create designer babies with exceptionally high “intelligence” before dysgenic pressures can turn our species into Homo philoprogenitus. In other words, short-term eugenics will save us from long-term dysgenics.

Elsewhere, Bostrom writes that “racism, sexism, speciesism, belligerent nationalism, and religious intolerance are unacceptable” within the transhumanist community. Yet, just a few years prior, Bostrom sent an email to the Extropian mailing list in which he said that “Blacks are more stupid than whites. I like that sentence and think it is true,” after which he wrote the N-word.

In fact, the very same discriminatory attitudes that animated 20th-century eugenics (racism, sexism, ableism, classism, xenophobia, elitism, and so on) are pervasive within the TESCREAL movement. For example, some people have defended Bostrom’s suggestion that some “racial” groups may be more “intelligent” than others.28 Leading figures like Bostrom and Anders Sandberg have approvingly cited the work of Charles Murray, famous for his scientific racism. The TESCREAL community also highly values “IQ” as a measure of “intelligence,” even though IQ tests were developed in part by 20th-century eugenicists who used them to justify racist policies (e.g., anti-miscegenation and anti-immigration laws). Yudkowsky has boasted on numerous occasions that his IQ is 143.

Furthermore, critics have rightly accused Bostrom of displaying an egregious “class snobbery” in his writings about transhumanism, and the prominent EA Peter Singer has argued that some infants with disabilities should be killed, in part because of the burden they place on society. (The idea that eugenic considerations justify infanticide goes back to ancient Roman law.29) People on the Extropian mailing list even worried in the mid-1990s that “since we as transhumans are seeking to attain the next level of human evolution, we run serious risks in having our ideas and programs branded by the popular media as neo-eugenics, racist, neo-nazi, etc.”

Finally, numerous articles about “AGI” and superintelligence have relied on explicitly racist definitions of “intelligence.” This led my colleague Keira Havens to ask: “Why are you relying on eugenic definitions, eugenic concepts, eugenic thinking to inform your work? Why, as you build the systems of the future, do you want to enshrine these static and limited ways of thinking about humanity and intelligence?”

Once you start looking around the TESCREAL neighborhood for examples of eugenics, you find them everywhere. Two French scholars coined the phrase “the eternal return of eugenics” to capture how eugenics has reappeared over and over again throughout Western history, in one form or another. TESCREALism is just the most recent iteration of this eternal return. Eugenics is alive and well, and is a key component in the worldview that’s driving the race to build AGI.

10. Is TESCREALism a religion? Is it a cult?

People sometimes describe something as a “religion” to discredit it. But there is a good case to make that TESCREALism should be understood as a type of religion. Consider that transhumanism was explicitly developed by 20th-century eugenicists, such as Julian Huxley, as a secular replacement for traditional religion, which declined significantly in the Western world (especially among the educated classes) during the 19th century. Though he didn’t use the word “transhumanism” until the 1950s, Huxley explored the idea of transhumanism in his 1927 book titled Religion Without Revelation. In other words, transhumanism was to be a new religion based not on faith and revelation, but on science and rationality.30 The roots of the TESCREAL bundle are thus deeply religious.

There are many parallels between traditional religion and the TESCREAL worldview. As with Christianity, TESCREALism promises a future utopia in which we abolish suffering and gain immortality. Instead of relying on supernatural agency to bring about utopia, the TESCREAL ideologies delegate this task to us: through our own ingenuity, we can “engineer paradise” (their term) right here and now, in this life rather than the afterlife, in this world rather than the otherworld. This gives TESCREALists a profound sense of meaning, purpose, and hope, as they see themselves as contributing to a grand(iose) project that is much larger than any one person. As with Christianity, eschatology plays a central role within the TESCREAL belief system.

There are also articles of faith among TESCREALists: faith that technology will solve all of our problems; faith in “progress” driven by science and technology; faith that AGI will deliver us to a new world of plenty—the Promised Land, as it were. One way to understand the role of AGI within this secular religion is as follows: since God does not exist, why not just create him? This is why many people in and around this community have described AGI as “God-like AI.” A TESCREAList named Connor Leahy recently referred to building a “safe” AGI—meaning an AGI that helps us create utopia rather than destroying us—as “divinity engineering.”

On the flip side, as with traditional religions like Christianity, there is also an apocalyptic dimension to these eschatological fantasies. This is why Musk has said that, with AGI, we are “summoning the demon.” In other words, if we build God in the form of AGI and it loves its parents—that is, us—then we will get eternal life in paradise. But if we build an AGI that does not care about us, it will be a “demon” that annihilates our species—the secular apocalypse. In traditional religion, the apocalypse is something that humanity must pass through to get to utopia. In the religion of TESCREALism, it is the alternative to paradise: we will either get paradise or annihilation, one or the other, and which we end up with will depend on whether AGI is a benevolent God or an annihilatory demon.31

TESCREALism even has its own version of resurrection: if advocates don’t live long enough to live forever (as Ray Kurzweil puts it), they can have their bodies, or just heads and necks, cryogenically frozen. The hope is that, once the technology becomes available, their bodies will be thawed and reanimated, at which point they will get to live forever. Many TESCREALists have signed up for this immortality service with cryonics companies, including Max More, Eliezer Yudkowsky, Nick Bostrom, Anders Sandberg, Ben Goertzel, Ray Kurzweil, Robin Hanson, James Miller, and Peter Thiel. As mentioned earlier, Sam Altman has arranged for his brain to be preserved by Nectome so that it can later be digitized, thus granting him “digital immortality” or “cyberimmortality.”

Finally, the TESCREAL movement is imbued with a certain kind of messianism. Many explicitly see their mission as “saving the world,” and hence believe that they are engaged in quite literally the most important project ever: to create “safe” AGI and mitigate “existential risk.” This project may even have cosmic significance, since if we build a “safe” or “value-aligned” AGI, it will enable us to create a flourishing utopian world among the stars full of astronomical amounts of value. But if we build an AGI with “misaligned values,” it will destroy us and, consequently, we will forever lose this “vast and glorious” future civilization spread across the universe. The stakes are enormous. The entire future of the universe may depend on what we do right now, in the coming years, with AGI. This conviction yields a powerful and intoxicating sense of self-importance.

Beyond this, there are strong reasons for seeing particular communities, such as EA and Rationalism, as “cults.” For example, these communities are very intolerant of those who criticize the core ideology, and EAs who criticize core aspects of the movement often feel compelled to hide their identity for fear of being socially ostracized, denied funding, excluded from EA spaces, and so on. In some cases, former EAs have been threatened with physical violence for criticizing the movement.

EA also strongly emphasizes the importance of evangelizing for the ideology, which it calls “movement building,” and often targets young people on college campuses. People who join EA, whom the EA community refers to as “converts,” sometimes live together and often date each other, as EA has its own dating websites. Polycules are not uncommon (nor is sexual harassment, it turns out). Members are encouraged to seek career advice from EA organizations like 80,000 Hours, and to donate only to EA-approved charities.32 They are told to donate at least 10% of their income, as with tithing in Christianity. Some of this money is funneled back into the community, to bolster “movement building.”

The community is very hierarchical, and those seen as having superior “intelligence” or “IQs”—the priestly class, as it were—are worshiped like heroes by the flock. Some members refer to the structure of the community as an “epistocracy,” whereby the “smartest” people occupy the highest echelons while the less “smart” people occupy the bottom rungs.

While preaching that members should give away most of their money to EA-approved charities, and often boasting about their altruistic sacrifices, EA leaders have engaged in luxurious spending. Effective Ventures, which houses MacAskill and Ord’s Centre for Effective Altruism, secretly bought a £15 million castle in Oxfordshire, sometimes dubbed the “Effective Altruism Castle.” Meanwhile, the most prominent EA, Sam Bankman-Fried, lived in a mansion in the Bahamas and owned $300 million in Bahamian real estate, which most people in the EA community didn’t know about because the leadership intentionally promoted myths about Bankman-Fried’s humble lifestyle. To celebrate the release of his 2022 book What We Owe the Future, MacAskill partied at an “ultra-luxurious vegan restaurant where the tasting menu runs $438 per person with tip, before tax.”

In 2023, it was reported that the main EA organization, cofounded by the TESCREALists William MacAskill and Toby Ord, secretly tested an internal ranking system of EAs, called “PELTIV” for “Potential Expected Long-Term Instrumental Value,” based in part on those members’ IQs. Those with IQs at or below 100 would have PELTIV points subtracted; those with IQs over 120 could have them added.

Some in the EA and Rationalist communities even practice what they call “Doom Circles,” in which people form “a circle to say what’s wrong with each other.” As one article on the Effective Altruism Forum explains, before each person’s turn to be criticized for the sake of self-improvement, a disclaimer is read:

We are gathered here this hour for the Doom of Steve [or whatever the EA-Rationalist’s name is]. Steve is a human being who wishes to improve, to be the best possible version of himself, and he has asked us to help him “see the truth” more clearly, to offer perspective where we might be able to see things he does not. The things we have to say may be painful, but it is a pain in the service of a Greater Good. We offer our truths out of love and respect, hoping to see Steve achieve his goals, and he may take our gifts or leave them, as he sees fit.

This looks a lot like a cult.33 The same could be said of other sub-communities within the TESCREAL movement, such as e/acc. Indeed, the leading e/acc Guillaume Verdon describes the movement that he cofounded as “a meta-religion.” When he was asked whether e/acc is a cult, he responded: “Define ‘cult,’” after which he admitted that “sometimes we do cult-like things.”

For these reasons, classifying the TESCREAL movement as a new religion is warranted by the evidence. That is not to denigrate religion, but to recognize the nature and potential dangers of this techno-utopian worldview that aims to build a “God” and then create a literal heaven in, well, the heavens.

11. Do people like Elon Musk actually believe in longtermism? Or are they just using it to justify their actions?

We cannot know what Musk actually believes, of course. He claims that longtermism is “a close match for my philosophy,” and has made other statements suggesting he’s a longtermist (see question #3). However, it is possible that tech billionaires like Musk came across longtermism and realized that it provides a superficially plausible moral excuse for what they want to do anyway: colonize space, merge our brains with AI, and so on, while ignoring the plight of the global poor.

Longtermism and the other TESCREAL ideologies naturally appeal to tech billionaires because these ideologies tell them exactly what they want to hear: not only are you excused from caring about “non-existential” risks like global poverty, but you are a morally better person for focusing on space colonization, jumpstarting the next stage of human evolution, etc. Imagine that Musk makes colonizing Mars possible. Since Mars is the stepping stone to the rest of the solar system, which is the stepping stone to the rest of the galaxy, which is the stepping stone to the rest of the universe, it could be that future digital posthumans in computer simulations spread throughout the cosmos look back on Musk as being quite possibly the most important human who ever lived. Musk is no doubt aware of this.

The same could be said of other tech billionaires like Sam Altman: he surely understands that if his company, OpenAI, creates a God-like AI that takes us to the heavens, he will have played one of the most important roles in cosmic history. The longtermist “ethic” (using that word loosely) implies that, were Musk or Altman to succeed, they would be among the most ethically important humans ever to have existed.

It is worth distinguishing between two cases here: first, there are those who came across and embraced the TESCREAL ideologies after establishing their careers; and second, there are those who pursued certain careers in AGI, crypto, etc. because of their prior TESCREAL beliefs. It could be that Musk is an example of the first case, though it is clear that his current views on AGI, existential risks, and so on, have been shaped by Bostrom’s work over the years.

However, many other prominent figures within the AGI race seem to have been inspired to pursue AGI because of the TESCREAL bundle. I mentioned earlier that Altman was a product of the EA and Rationalist communities, and he credits Eliezer Yudkowsky with getting “many of us interested in AGI.” Dan Hendrycks, an advisor for xAI, says that EA-longtermist considerations led him to focus on AGI. Anthropic was started by EA-longtermists who worked for OpenAI but were dissatisfied with the “AI safety” culture at OpenAI (see footnote #12). And so on.

With respect to this second category, it is clear that these individuals genuinely believe in the TESCREAL view that AGI could bring about the Singularity, which could be the most important event not just in human history but in cosmic history, since AGI—if it is “safe” or “value-aligned”—will enable us to become immortal posthumans who fill the entire universe with the “light of consciousness.”34

12. What is an “existential risk” and how does it relate to the TESCREAL bundle?

The idea of an existential risk was introduced by Nick Bostrom in a 2002 paper published in the Journal of Evolution and Technology, previously named the Journal of Transhumanism.35 It was originally defined in explicitly transhumanist terms: the transition from a human to a posthuman world could fail in many ways because the very technologies needed to create a posthuman world will introduce unprecedented risks to humanity that could destroy us during this transitional phase.

Some at the time, like Bill Joy, the cofounder of Sun Microsystems, argued that these technologies are too dangerous to develop. Hence, we should ban their development. Transhumanists like Bostrom, Yudkowsky, Kurzweil, and others strongly rejected this view. After all, if we fail to develop these technologies, then we will never be able to create techno-utopia. A failure to develop such technologies would itself be an existential catastrophe (the instantiation of an existential risk).

Their solution, contra Joy, was to create a new field of study that aims to understand and mitigate the risks of emerging technologies. By doing this, we can maximize our chances of creating techno-utopia while minimizing the probability of destroying ourselves in the process. This is how “Existential Risk Studies” and the field of “AI safety” were born. An existential risk, then, is any event that would prevent us from creating a stable, flourishing posthuman civilization.

The following year, in 2003, Bostrom published his paper “Astronomical Waste,” which expanded the definition of an “existential risk.”36 This paper argued that we should try to colonize space as soon as possible. As we spread throughout the universe, we should build “planet-sized” computers that run virtual reality worlds full of trillions and trillions of “happy” digital people, resulting in “astronomical” amounts of future “value.” (This is the paper that Elon Musk retweeted in 2022 with the line, “Likely the most important paper ever written.”) An existential risk, on this account, is any event that would prevent us from realizing these astronomical amounts of future value by creating trillions of digital people living in utopia.

The idea of an existential risk is thus intimately tied to the TESCREAL worldview. This is why I have stated that I do not care about “existential risks”: I do not care about whether we colonize space, plunder the cosmos for its resources, create huge virtual reality worlds full of digital people, and ascend the Kardashev scale (a measure of how much energy civilizations use).37

I would go a step further and argue that avoiding an existential catastrophe would itself be catastrophic for most of humanity. Why? Because avoiding an existential catastrophe would entail, by definition, that we have realized this techno-utopian world, and realizing this future would almost certainly marginalize already-marginalized communities, if not eliminate them entirely.

Even more, it would probably mean the marginalization or elimination of our species, Homo sapiens. In a world run by posthumans, would humanity—or what some TESCREALists disparagingly call “legacy humans”—have any place? No, not really. Though many TESCREALists claim to be strongly opposed to “human extinction,” they are in fact pro-human extinction. Beware of how TESCREALists redefine words like “human” to include our “posthuman” descendants, such that, if our species were to be completely replaced by these posthumans later this century, “human extinction” on their definition would not have occurred. See this article of mine for further discussion.

What about the natural world, our fellow creatures here on Earth? In his 2022 book What We Owe the Future, William MacAskill writes that our systematic obliteration of the biosphere might actually be net positive. Why? Because, he argues, wild animals suffer, so the fewer wild animals there are, the less wild animal suffering there will be. Killing off species and destroying ecosystems may therefore be overall good. Hence, it’s not even clear that nonhuman animals would have any home in the techno-utopian world envisioned by TESCREALism.

The fact is that this vision of the future was crafted almost entirely by privileged white men at elite universities like Oxford and in Silicon Valley. Consequently, it reflects all of the prejudices, biases, and shortcomings of this highly privileged group. In many ways, the utopian fantasies of TESCREALism are just a radical extension of techno-capitalism. Bostrom even defined “existential risk” in 2013 as a failure to attain a stable state of “technological maturity,” whereby we gain total control over nature and maximize economic productivity. Gaining control over nature and maximizing productivity will optimally position us to colonize the universe and build giant computer simulations full of trillions and trillions of digital people—that is why “technological maturity” is important. This is nothing more than Baconianism (the aim of subduing or subjugating nature) and capitalism (maximizing economic productivity) on steroids. The same idea is popular among AI accelerationists, who want to bring about the “techno-capital Singularity.”

One of the most striking features of the TESCREAL literature is the complete absence of virtually any reference to what the future could or—more importantly—should look like from alternative perspectives, such as those of Indigenous cultures, Afrofuturism, feminism, Queerness, Disability, and various non-Western thought traditions. This absence is very significant because, as my colleague Monika Bielskyte likes to say, one must always design with rather than design for. You cannot design a future for all of humanity without including within the design process itself people who represent other cultures, traditions, philosophies, and perspectives.38

TESCREALists, in their hubris, claim to have special access to what humanity as a whole should want: a future that realizes, throughout the entire cosmos, the fever dreams of Baconianism and techno-capitalism. They then aim to impose this vision on all of humanity. How? In part by creating a God-like AGI system that helps us become posthuman, spread into space, and reengineer galaxies. (Recall here Sam Altman’s admission that “future galaxies are indeed at risk” when it comes to getting AGI right.)

This is tyranny. TESCREALists are trying to unilaterally make irreversible decisions that will affect not just every human alive today, but every being that exists in the future. The arrogance and elitism behind this anti-democratic approach to shaping our collective future should frighten everyone on Earth. If companies like OpenAI really cared about “benefiting all of humanity,” they would rush to build a coalition of diverse voices that represent a multiplicity of perspectives to determine what sort of future we ought to steer the ship of civilization toward. One cannot design for. One must always design with.

13. Do TESCREALists advocate for the extinction of humanity?

I address this question in a recent article for Truthdig, here. In short, the term “human extinction” could mean several different things. If we understand human extinction as a scenario in which our species dies out and we do not leave behind any successors—posthuman cyborgs, digital minds, intelligent machines, etc.—then TESCREALists are opposed to human extinction. But if we take “human extinction” to mean “the elimination of our species, Homo sapiens,” then most TESCREALists are either indifferent to human extinction or hope to actively bring it about. That is to say, if Homo sapiens were entirely replaced by a “superior” posthuman species—even if this were to happen in the coming decades, within our lifetimes—that would be fine. In general, TESCREALists do not care about the fate of our species: our species’ survival only matters insofar as it’s necessary to inaugurate the next stage of transhuman evolution.

Hence, as alluded to under question #12, a kind of “pro-extinctionism” is widespread within the TESCREAL movement. Don’t be fooled when TESCREALists say that “we must avoid human extinction at all costs.” They are not talking about Homo sapiens, but “human” in a much broader sense that includes our posthuman successors, which may be entirely postbiological and have little in common with the way we think, feel, and so on.

14. What can be done to counteract the influence of TESCREALism?

I do not have a good answer to this question at the moment. The TESCREAL movement is extremely powerful. It has billions and billions of dollars behind it, and is being promoted by some of the most powerful people in the world, such as Elon Musk. My best answer at the moment is: educate yourself and those around you about these ideologies. This is the obvious first step: to recognize and name the threat. As my research progresses, I hope to develop a more compelling answer to what the next steps ought to be.

Acknowledgements: Thanks to Remmelt Ellen and several others for insightful comments on a draft of this document. Their willingness to read and provide feedback on this document does not imply that they endorse its content. All remaining errors are my own.

This document will be updated when necessary. The present version is 1.0, written on April 26, 2024 by Dr. Émile P. Torres. To financially support my work, go here.

In my paper with Gebru, we capitalize some but not all of these ideologies depending on how advocates capitalize them. Extropianism, Rationalism, and Effective Altruism are typically capitalized, while transhumanism, singularitarianism, cosmism, and longtermism are typically not capitalized.

Somewhat amusingly, Bostrom thanks both ChatGPT-4 and Claude 2 in the Acknowledgments “for extensive comments on the manuscript.”

Note that “Rationalism,” as used by people like Yudkowsky, has nothing to do with the traditional philosophical position of “rationalism,” exemplified by the work of philosophers like Plato and Descartes.

In fact, as the Centre for Effective Altruism notes, “Trajan House, a newly established EA hub in Oxford, currently accommodates the Centre for Effective Altruism, the Future of Humanity Institute, the Global Priorities Institute, the Forethought Foundation, the Centre for the Governance of AI, the Global Challenges Project, and Our World in Data.”

That Bostrom and Beckstead laid the foundations of longtermism is widely acknowledged. As the leading EA-longtermist Toby Ord writes in his 2020 book about “existential risks,” the word “longtermism” “was coined by William MacAskill and myself. The ideas [of longtermism] build on those of our colleagues Nick Beckstead (2013) and Nick Bostrom (2002b, 2003).”

As one article on the EA Forum, which is actually critical of Yudkowsky, notes: “Eliezer is a hugely influential thinker, especially among effective altruists, who punch above their weight in terms of influence.”

In 2022, after the collapse of Bankman-Fried’s FTX, Yudkowsky argued in a popular post on the Effective Altruism Forum that no one who received money from FTX should feel obligated to return it. Take the money and run!

Though Yudkowsky has questioned the label “longtermism,” consider what the poster boy of longtermism, William MacAskill, writes in an article about the history of this label: “Up until recently, there was no name for the cluster of views that involved concern about ensuring the long-run future goes as well as possible. The most common language to refer to this cluster of views was just to say something like ‘people interested in x-risk reduction.’” Since the early 2000s, Yudkowsky’s work has focused on reducing the “existential risks” posed by AGI. In a very straightforward sense, then, Yudkowsky was a longtermist before “longtermism” was a thing. Or consider a LessWrong post of his from 2013, in which he writes: “I regard the non-x-risk parts of EA as being important only insofar as they raise visibility and eventually get more people involved in, as I would put it, the actual plot.” In other words, he’s saying that what matters is EA-longtermism; the other “cause areas” of EA matter because of their ability to generate interest in the longtermist project of ensuring that there are posthumans (quoting him again) “100,000,000 years” from now.

As Wikipedia (accurately) notes: “In December 2012, the [Singularity Institute] sold its name, web domain, and the Singularity Summit to Singularity University, and in the following month took the name ‘Machine Intelligence Research Institute.’” The Singularity University was founded by Ray Kurzweil and Peter Diamandis, both TESCREALists. Elon Musk has some links with the Singularity University, and just recently, in 2024, “spoke during a fireside chat with Peter Diamandis at the Abundance 360 Summit, hosted by Singularity University, a Silicon Valley institution that counsels business leaders on bleeding-edge technologies.”

In addition to his talk at an EA Global conference, Bostrom has also presented at the Singularity Summit, where he was introduced by Peter Thiel. Others who have appeared on the same stage include Max More, the founder of Extropianism and modern transhumanism; Eliezer Yudkowsky, the transhumanist Extropian singularitarian who founded Rationalism; and Ray Kurzweil, the leading singularitarian transhumanist.

In fact, Drexler’s writings about techno-utopia (e.g., in his 1986 book Engines of Creation) made him “something of a patron saint among Extropians,” according to a 1994 article in Wired.

As this indicates, the TESCREAL movement—especially its leadership—is overwhelmingly male, and mostly white. It is not a diverse community.

This website was discovered by two colleagues of mine: Keira Havens and Nicholas Rodelo.

AI safety emerged out of the TESCREAL movement as an answer to the question: “How should we proceed with building AGI given that it could pose a grave threat to humanity?” One possible answer is: “Don’t build AGI—ever.” This seems to have been the position that Bill Joy was advocating in his famous 2000 Wired article. In contrast, the TESCREAL answer was: “We can’t never build AGI, because AGI is probably necessary to realize the techno-utopian world among the stars that we hope to bring about. Hence, we should create a new field of study that focuses on understanding and mitigating the existential risks of AGI, to ensure that the AGI we do—and must—eventually build delivers us to paradise rather than inadvertently destroying humanity as an unintended consequence of ‘misaligned’ goals.” Bostrom, Yudkowsky, and other leading TESCREALists thus set out to found “AI safety” as a field. As Bostrom recently stated in an article about the Future of Humanity Institute, “we were the first in academia to develop the fields of AI safety and AI governance.”

The field of “AI safety” contrasts with the field of “AI ethics,” which is not associated (or at least not in any direct way) with the TESCREAL movement. I was once aligned with the AI safety field, when I was a TESCREAList, but now consider myself squarely within AI ethics.

This has been lightly edited to improve readability.

For example, the poster boy of EA-longtermism, William MacAskill, writes in his book What We Owe the Future that Karnofsky is one of the people “who had a particularly profound impact on my broader thinking about longtermism.”

That said, as Beth Singler notes in her forthcoming book Religion and Artificial Intelligence,

“Western transhumanists” have however lauded the Cosmists. Michel Eltchaninoff, author of the book Lenin Walked on the Moon: The Mad History of Russian Cosmism (2023), claims that “Californian transhumanism draws on very diverse sources, but it recognizes its debt to Nikolai Fyodorov. Elon Musk cites Konstantin Tsiolkovsky as a role model” (LeTemps 2022).

Indeed, in Musk’s words: “[Tsiolkovsky] was amazing. He was truly one of the greatest. … At SpaceX, we name our conference rooms after the great engineers and scientists of space, and one of our biggest conference rooms is named after Tsiolkovsky.”

Though I am a fan of the author’s work.

I do not know if Hughes has a forthcoming book. I am just using this as an example.

An amusing response to Hughes and Sennesh’s article came from Anders Sandberg, who tweeted a link to the article along with the comment, “a fun leftist take … on why TESCREAL is a conspiracy theory missing actual critique.” This is amusing because of the irony: Sandberg is a prominent transhumanist who was very active in the Extropian movement, writes about the Singularity, has given talks at the Singularity Summit, has had close ties with the Rationalist community and been commenting on the LessWrong website since 2008, and has spent most of his career at the Future of Humanity Institute, which shared office space with the Centre for Effective Altruism before Oxford shut it down. Sandberg has his very own entry on the official Effective Altruism website, and explicitly considers himself to be a longtermist. In other words, Sandberg is at the very heart of the TESCREAL movement, yet calls it—without any basis in reality—a “conspiracy theory.”

More specifically, the genealogy of EA has two main lineages: first, the transhumanist movement, and second, the “global ethics” of Peter Singer, a eugenicist who has become one of the leading figures within EA. The influence of the first is most clearly seen in EA’s invention of and turn toward longtermism, while the influence of the second is seen in EA’s alternative “cause areas” of global poverty and animal welfare (as Singer was also instrumental in starting the animal rights movement in the Western world).

In more detail, Robin Hanson has been a transhumanist since the 1990s. (He is also a Men’s Rights activist, and was once described as the “creepiest economist in America” by Slate.) In the 1990s, Hanson was heavily involved in the Extropian movement, and from 2007 to 2024 he held a position at the Future of Humanity Institute, Nick Bostrom’s now-defunct TESCREAL organization. He is also a longtermist, writing on Overcoming Bias: “Will MacAskill has a new book out today, What We Owe The Future, most of which I agree with, even if that doesn’t exactly break new ground.” What We Owe the Future was written to be the authoritative non-academic text on longtermism. He continues: “Yes, the future might be very big, and that matters a lot, so we should be willing to do a lot to prevent extinction, collapse, or stagnation. I hope his book induces more careful future analysis, such as I tried in Age of Em. (FYI, MacAskill suggested that book’s title to me.)”

Of note is that Ord created a LessWrong account in 2009, while MacAskill, to whom Ord introduced the idea of existential risks around the same time, began posting on LessWrong in 2012.

Beckstead has been a member of the EA community since at least 2010.

In fact, one of the names that was seriously considered for the community, before a handful of early members voted on “Effective Altruism,” was “Effective Utilitarian Community.”

This view is exemplified by the “Great Filter” framework that has been extremely influential among TESCREALists. It was developed by Robin Hanson; see footnote #18 for more on Hanson.

As Toby Ord, for example, writes in his book on existential risks and EA-longtermism, “either humanity takes control of its destiny and reduces [existential] risk to a sustainable level, or we destroy ourselves.”

Others in the EA community strongly disagreed; e.g., here. But the very fact that such EAs felt the need to say, in a public forum, that community members should reject racism and eugenics is indicative of the social environment in which they are embedded. If racist and eugenic views were not widespread, there would be no need to argue against them. As an anonymous white person in the community told researchers for a study titled “Diversity and Inclusion in Existential Risk Studies,” “I think it’s a witch hunt; I think that Bostrom has nothing to apologise for and I think that the whole things is a disgrace. … However, this is a topic where I feel very reluctant to speak publicly because I sense that these views are not welcome.”

As I write here, “the so-called ‘Twelve Tables’ of Roman law ‘made provisions for infanticide on the basis of deformity and weakness.’” Peter Singer’s view is an affirmation of this eugenic idea that “defective” infants should be killed.

As it happens, some of Huxley’s writings about “scientific humanism” were published by the “Rationalist Press Association,” an interesting terminological connection with the “Rationalist” community founded by Eliezer Yudkowsky.

However, this should be qualified because many TESCREALists, especially within the doomer camp, believe that we are passing through a period of heightened—and indeed unprecedented—existential risk. This period, which could be likened to the apocalypse, is called the “Time of Perils.” Once we get through the Time of Perils by safely inventing advanced technologies, including superintelligent machines and molecular nanotechnology, and colonizing space, we will then enter a world of far less existential risk. We will be safe. In this sense, the other side of the apocalyptic valley through which we are currently treading is paradise, which parallels traditional Christian eschatology much more closely.

As one EA writes, “the effective altruism community is very demanding. It has a say over how I spend my money (10% to the All Grants Fund this year). It has a say over my career choices (saving a shrimp near you). It has a say over my diet (which I grieve any time I smell non-vegan cookies at a bakery). Heck, it has a say over my discourse norms (you have no idea how many fun blog posts got ruined by fact-checking).” For more on “saving a shrimp near you,” see the “Shrimp Welfare Project.” Note also that the paragraph in which this footnote appears draws from this article on the EA Forum.

Indeed, a prominent transhumanist and former member of the Rationalist community (who gave talks at the Singularity Summit and raised hundreds of thousands of dollars for the Singularity Institute) told me in late 2022 that the Bay Area Rationalist community, in particular, has become a “full grown apocalypse cult.”

For a trenchant critique of the Singularity idea, see David Thorstad’s paper “Against the Singularity Hypothesis,” here.

As I note in my 2024 book Human Extinction, “both journals were run by the World Transhumanist Association that Bostrom cofounded in 1998, and indeed Bostrom was the first editor-in-chief of the Journal of Transhumanism.”

Note that the central idea of the paper was not original to Bostrom. It drew from an earlier paper by Milan Ćirković, published in the same journal.

By analogy, imagine that the term “existential risk” had been introduced by an “anarcho-primitivist” (as they call themselves) to mean any event that prevents us from returning to the lifeways of our hunter-gatherer ancestors (“Back to the Pleistocene!”). If that is how the term were defined, then most people would no doubt say, “I do not care about mitigating ‘existential risks.’” I say the same thing about the TESCREAL definition of this term.

To be more precise, even if the TESCREAL literature were to take seriously these alternative perspectives on the future—which it doesn’t—that still would not be enough. People representing these alternative perspectives would have to be included within the process of designing our collective future.